Prototyping a vocoder with GStreamer

A vocoder is basically a tool that turns any sound into something that can “speak”. Some examples can be seen in this Collin's Lab episode about MIDI, or in this classic from Kraftwerk (the vocoder effect starts at 1:06).

Whether you are planning to ask for a ransom, join Daft Punk, or travel back in time to the '70s, a vocoder always comes in handy.

The vocoded audio is built from two audio sources: a formant (also called a modulator) and a carrier. In high-level terms the formant can be imagined as the original content and presentation of a message, and the carrier as a set of parameters that can be used to build an alternative presentation of that content. This is better described in the What is a Vocoder? article.

Very often vocoding mechanisms assume that the formant is on one channel of a stereo track (e.g. the right channel), and the carrier is on another channel (e.g. the left one).

A “build a vocoder” problem can be decomposed into these independent sub-problems:

- Get audio from a microphone, play audio through the sound card.

- Apply a real time transformation to an audio stream.

- Get two mono audio sources (one for the formant, and one for the carrier) and mix them into a single stereo audio stream.

It is possible to solve these problems quite easily on GNU/Linux with GStreamer.

Step 1: input and output audio with GStreamer

This is trivial: use autoaudiosrc and autoaudiosink, and adjust the details of the sound paths using the sound server available on the platform in use (e.g. pulseaudio):

$ gst-launch-1.0 autoaudiosrc ! autoaudiosink

Step 2: apply a real-time audio effect with GStreamer

Audio effects in GStreamer can be applied by just putting the right element in the pipeline. Here is an example with the audioecho plugin from the audiofx collection:

$ gst-launch-1.0 autoaudiosrc ! audioecho delay=500000000 intensity=0.6 feedback=0.4 ! autoaudiosink
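The delay property of audioecho is expressed in nanoseconds, which is why 500000000 above means half a second. A minimal sketch of building the same pipeline from a value in milliseconds follows; the ms_to_ns helper and the RUN_PIPELINE variable are names made up for this example, they are not part of GStreamer.

```shell
#!/bin/sh
# Hypothetical helper: convert milliseconds to the nanoseconds expected by
# the delay property of audioecho.
ms_to_ns() {
    echo "$(( $1 * 1000000 ))"
}

DELAY_MS=500
PIPELINE="autoaudiosrc ! audioecho delay=$(ms_to_ns "$DELAY_MS") intensity=0.6 feedback=0.4 ! autoaudiosink"

# Print the command; only run it when explicitly requested (RUN_PIPELINE is
# an assumption of this sketch, not a GStreamer variable).
echo "gst-launch-1.0 $PIPELINE"
if [ "${RUN_PIPELINE:-0}" = 1 ]; then
    # Unquoted on purpose: the description is split into gst-launch arguments.
    gst-launch-1.0 $PIPELINE
fi
```

Running it with RUN_PIPELINE=1 in the environment should behave like the command above.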

More complex effects can be provided by LADSPA plugins.

Some of these plugins are provided by tap-plugins and they are already packaged for the most common distributions.

LADSPA plugins are not specific to GStreamer; they just have to be in a location where GStreamer can find them (e.g. under /usr/lib/ladspa). They can be listed with:

$ gst-inspect-1.0 ladspa

An example pipeline with the pitch plugin:

$ gst-launch-1.0 autoaudiosrc ! audioconvert ! ladspa-tap-pitch-so-tap-pitch rate-shift=70 ! autoaudiosink

Another fun one to try is ladspa-tap-reflector-so-tap-reflector.

Step 3: mixing two audio channels with GStreamer

In order to mix two separate audio sources into a single stereo stream with a left and a right channel, the interleave element can be used:

$ gst-launch-1.0 -v interleave name=i ! \
    capssetter caps="audio/x-raw,channels=2,channel-mask=(bitmask)0x3" ! \
    audioconvert ! wavenc ! filesink location=audio-interleave-test.wav \
    audiotestsrc wave=0 num-buffers=100 ! audioconvert ! "audio/x-raw,channels=1" ! queue ! i.sink_0 \
    audiotestsrc wave=2 num-buffers=100 ! audioconvert ! "audio/x-raw,channels=1" ! queue ! i.sink_1

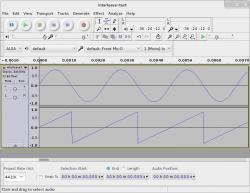

The pipeline above creates an audio file called audio-interleave-test.wav with two different waves in the two channels:

The only thing to note is that the channel-mask property has to be specified in order to have a properly built stereo stream; this might be achieved in a more readable way in code by setting the channel-positions property of the interleave element.
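The value 0x3 is simply the bit for the front-left channel (bit 0) combined with the bit for the front-right channel (bit 1). The sketch below recomputes it and also checks the channel count of the generated file by reading the WAV header directly. The wav_channels helper is a hypothetical name, and it assumes a plain header where the "fmt " chunk comes first, so that the channel count is stored at byte offset 22.

```shell
#!/bin/sh
# Bit positions of the two stereo channels in a GStreamer channel-mask:
# bit 0 is front-left, bit 1 is front-right.
FRONT_LEFT=0
FRONT_RIGHT=1
STEREO_MASK=$(( (1 << FRONT_LEFT) | (1 << FRONT_RIGHT) ))
printf 'channel-mask=(bitmask)0x%x\n' "$STEREO_MASK"

# Hypothetical helper: print the channel count stored in a WAV header.
# Assumes the "fmt " chunk comes first, so the 16-bit little-endian channel
# count sits at byte offset 22.
wav_channels() {
    set -- $(od -A n -t u1 -j 22 -N 2 "$1")
    echo "$(( $1 + 256 * $2 ))"
}

WAV_FILE="audio-interleave-test.wav"
if [ -f "$WAV_FILE" ]; then
    echo "$WAV_FILE has $(wav_channels "$WAV_FILE") channel(s)"
fi
```

If the header layout differs from the assumed one, a tool like gst-discoverer-1.0 is a more robust way to inspect the file.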

Wrapping it up

UPDATE: the latest version of gst-vocoder.sh uses the alsamidisrc plugin and the vocoder_1337.so from the swh-plugins package.

For a vocoder prototype the carrier can very well be taken from the audiotestsrc element: a sawtooth wave is OK as a simple carrier, maybe mixed with some white noise (the adder plugin can be used for that). The formant can be taken from a microphone.

A vocoder LADSPA plugin is available in the lmms package, but sometimes it is installed in a non-standard location (see Debian bug #758888); if that is the case the shared object must be made available under /usr/lib/ladspa in order for GStreamer to pick it up; a symlink is enough, e.g.:

$ ln -s /usr/lib/x86_64-linux-gnu/lmms/ladspa/vocoder_1337.so /usr/lib/ladspa

After that, the vocoder plugin becomes available to GStreamer as ladspa-vocoder-1337-so-vocoder-lmms.
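Before creating the symlink it can be useful to check whether the shared object is already visible. The sketch below looks for vocoder_1337.so in a colon-separated list of directories; the find_ladspa_plugin helper is a hypothetical name, and using the LADSPA_PATH environment variable with /usr/lib/ladspa as a fallback is an assumption based on the usual LADSPA convention and on the location mentioned above.

```shell
#!/bin/sh
# Hypothetical helper: print the first directory of a colon-separated search
# path that contains the given LADSPA shared object.
find_ladspa_plugin() {
    plugin=$1
    search_path=$2
    old_ifs=$IFS
    IFS=:
    for dir in $search_path; do
        if [ -e "$dir/$plugin" ]; then
            IFS=$old_ifs
            echo "$dir"
            return 0
        fi
    done
    IFS=$old_ifs
    return 1
}

# Assumption: honour LADSPA_PATH if set, otherwise use the location above.
SEARCH_PATH="${LADSPA_PATH:-/usr/lib/ladspa}"
if found=$(find_ladspa_plugin vocoder_1337.so "$SEARCH_PATH"); then
    echo "vocoder_1337.so found in $found"
else
    echo "vocoder_1337.so not found in $SEARCH_PATH, a symlink may be needed"
fi
```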

Finally, here is a possible pipeline implementing a vocoder based on GStreamer:

#!/bin/sh

# The formant comes from the default audio input (e.g. a microphone).
FORMANT="autoaudiosrc"

# The carrier is a sawtooth wave (wave=2) mixed with white noise (wave=5).
CARRIER_PARAMS="audiotestsrc wave=2 ! carrier. audiotestsrc wave=5 ! carrier."
CARRIER="adder name=carrier"

SINK="autoaudiosink"

# Formant on channel 0, carrier on channel 1, then the vocoder on the
# interleaved stereo stream.
gst-launch-1.0 -v interleave name=i ! \
  capssetter caps="audio/x-raw,channels=2,channel-mask=(bitmask)0x3" ! audioconvert ! audioresample ! \
  ladspa-vocoder-1337-so-vocoder-lmms \
    number-of-bands=16 \
    left-right=0 \
    band-1-level=1 \
    band-2-level=1 \
    band-3-level=1 \
    band-4-level=1 \
    band-5-level=1 \
    band-6-level=1 \
    band-7-level=1 \
    band-8-level=1 \
    band-9-level=1 \
    band-10-level=1 \
    band-11-level=1 \
    band-12-level=1 \
    band-13-level=1 \
    band-14-level=1 \
    band-15-level=1 \
    band-16-level=1 \
  ! $SINK \
  $FORMANT ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_0 \
  $CARRIER ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_1 \
  $CARRIER_PARAMS
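The sixteen band-N-level properties all follow the same pattern, so they can also be generated with a loop instead of being spelled out. This is only a sketch: band_levels is a hypothetical helper, and the property names are the ones used in the script above.

```shell
#!/bin/sh
# Hypothetical helper: print "band-1-level=L ... band-N-level=L".
band_levels() {
    bands=$1
    level=$2
    i=1
    props=""
    while [ "$i" -le "$bands" ]; do
        props="$props band-$i-level=$level"
        i=$(( i + 1 ))
    done
    # Drop the leading space.
    echo "${props# }"
}

# The vocoder element description, ready to be spliced into the pipeline.
VOCODER="ladspa-vocoder-1337-so-vocoder-lmms number-of-bands=16 left-right=0 $(band_levels 16 1)"
echo "$VOCODER"
```

The resulting $VOCODER variable could replace the long list of properties in the gst-launch-1.0 invocation, and it makes it easier to experiment with a different level for all the bands.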

Extra goodies: using MIDI to control the carrier

From time to time a discussion starts about how to read from (or write to) MIDI devices with GStreamer, and yet no alsamidisrc or alsamidisink elements are available.

It is already possible to use a MIDI file as a vocoder carrier using the midiparse and wildmidi elements, but being able to change the notes interactively would be more fun.

To work around the lack of a native mechanism for getting MIDI events into GStreamer, an audio loopback device can be used.

ALSA audio loopback device

Load the ALSA loopback module:

$ sudo modprobe snd-aloop

Now it is possible to write to a loopback device named hw:Loopback,0,0:

$ gst-launch-1.0 audiotestsrc ! audioconvert ! alsasink device="hw:Loopback,0,0"

and read from the corresponding hw:Loopback,1,0:

$ gst-launch-1.0 alsasrc device="hw:Loopback,1,0" ! audioconvert ! autoaudiosink
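If the loopback device cannot be opened, the first thing to check is whether the module is actually loaded. A minimal sketch, assuming a Linux system where loaded modules are listed in /proc/modules (with underscores instead of dashes in the names); module_loaded is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a kernel module is listed in a
# /proc/modules-style file (module names use underscores there).
module_loaded() {
    module=$1
    modules_file=${2:-/proc/modules}
    [ -r "$modules_file" ] && grep -q "^$module " "$modules_file"
}

if module_loaded snd_aloop; then
    echo "snd-aloop is loaded"
else
    echo "snd-aloop is not loaded, try: sudo modprobe snd-aloop"
fi
```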

Getting synthesized sounds into GStreamer

The loopback audio device can be used with a software MIDI synthesizer like Timidity++ or Fluidsynth.

A possible command to hook up the sequencer to the loopback audio device is:

$ timidity -Os -o "hw:Loopback,0,0" -iA --sequencer-ports 1

The command also prints the MIDI port to connect the MIDI input device to, e.g.:

Requested buffer size 32768, fragment size 8192
ALSA pcm 'hw:Loopback,0,0' set buffer size 32768, period size 8192 bytes
TiMidity starting in ALSA server mode
Opening sequencer port: 129:0
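The port number changes depending on which other sequencer clients are running, so it can be handy to extract it from the output instead of copying it by hand. A small sketch, assuming the message has the same form as in the example output above; sequencer_port is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read TiMidity++ output on stdin and print the first
# sequencer port it announces, in the client:port form.
sequencer_port() {
    sed -n 's/.*Opening sequencer port: *\([0-9][0-9]*:[0-9][0-9]*\).*/\1/p' | head -n 1
}

# Sample line copied from the example output.
PORT=$(echo "Opening sequencer port: 129:0" | sequencer_port)

# The address to pass to the MIDI input device.
echo "vkeybd --device alsa --addr $PORT"
```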

A virtual piano can be used as a MIDI input device, for example vkeybd, taking care to disable key auto-repeat and remembering to connect it to the right MIDI port:

$ xset -r; vkeybd --device alsa --addr 129:0; xset r on

Now the notes can be heard on the output loopback device:

$ gst-launch-1.0 alsasrc device="hw:Loopback,1,0" ! audioconvert ! autoaudiosink

Although this contraption is OK for a vocoder prototype, it is not great for playing live music because some noticeable latency is introduced, so an alsamidisrc to get messages directly from the MIDI input device would still make sense.

Maybe the code for these MIDI elements can be based on aMIDIcat, which BTW is a nifty little tool to automate MIDI processing.

A vocoder with an interactive carrier

At last, the pipeline for the vocoder with the interactively generated carrier would look like this:

#!/bin/sh

FORMANT="autoaudiosrc"

# In order to have a MIDI carrier use the alsa loopback device and Timidity++ like this:
#
# $ sudo modprobe snd-aloop
# $ timidity -Os -o "hw:Loopback,0,0" -iA --sequencer-ports 1
#
# then connect to Timidity++ with a MIDI input device, e.g.:
#
# $ xset -r
# $ vkeybd --device alsa --addr 129:0
# $ xset r on
#
# and have a lot of fun vocoding the crap out of your formant channel
CARRIER_TONE="alsasrc device=hw:Loopback,1,0 ! audioconvert"

# Add some white noise to the carrier
CARRIER_PARAMS="$CARRIER_TONE ! carrier. audiotestsrc wave=5 volume=0.1 ! carrier."
CARRIER="adder name=carrier"

SINK="autoaudiosink"

gst-launch-1.0 -v interleave name=i ! \
  capssetter caps="audio/x-raw,channels=2,channel-mask=(bitmask)0x3" ! audioconvert ! audioresample ! \
  ladspa-vocoder-1337-so-vocoder-lmms \
    number-of-bands=16 \
    left-right=0 \
    band-1-level=1 \
    band-2-level=1 \
    band-3-level=1 \
    band-4-level=1 \
    band-5-level=1 \
    band-6-level=1 \
    band-7-level=1 \
    band-8-level=1 \
    band-9-level=1 \
    band-10-level=1 \
    band-11-level=1 \
    band-12-level=1 \
    band-13-level=1 \
    band-14-level=1 \
    band-15-level=1 \
    band-16-level=1 \
  ! $SINK \
  $FORMANT ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_0 \
  $CARRIER ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_1 \
  $CARRIER_PARAMS


Other ways of doing it

An alternative way to apply the vocoder LADSPA plugin can be through pulseaudio; this might be more transparent to applications, but it looks less portable and also less flexible for a final product (how to control the vocoder plugin properties interactively?).

An example with the non-interactive carrier:

Load the vocoder LADSPA plugin in pulseaudio:

#!/bin/sh

# Get the master device with:
#
# $ pacmd list-sinks | grep "name:"
#
MASTER=alsa_output.pci-0000_00_07.0.analog-surround-40

pacmd load-module module-ladspa-sink \
  sink_name=vocoder_sink \
  master=$MASTER \
  plugin=vocoder_1337 \
  label=vocoder-lmms \
  control=16,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1
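The control argument is a plain comma-separated list of the plugin control values. Judging from the property order used in the GStreamer pipelines, it appears to be the number of bands, the left/right setting, and then one level per band, but that ordering is an assumption. Under that assumption the list can be generated rather than typed; control_values is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: build "BANDS,LEFT_RIGHT,LEVEL,...,LEVEL" with one
# LEVEL entry per band (the ordering is assumed, see the text above).
control_values() {
    bands=$1
    left_right=$2
    level=$3
    values="$bands,$left_right"
    i=1
    while [ "$i" -le "$bands" ]; do
        values="$values,$level"
        i=$(( i + 1 ))
    done
    echo "$values"
}

# 16 bands, left-right=0, every band level set to 1.
echo "control=$(control_values 16 0 1)"
```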

Use the newly defined pulseaudio sink named vocoder_sink with GStreamer to feed the vocoder plugin:

#!/bin/sh

FORMANT="autoaudiosrc"
CARRIER="audiotestsrc wave=2"
SINK="pulsesink device=vocoder_sink"

gst-launch-1.0 -v interleave name=i ! \
  capssetter caps="audio/x-raw,channels=2,channel-mask=(bitmask)0x3" ! audioconvert ! audioresample \
  ! $SINK \
  $FORMANT ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_0 \
  $CARRIER ! audioconvert ! audioresample ! audio/x-raw, rate=44100, format=S16LE, channels=1 ! queue ! i.sink_1

I am sure that JACK can be used in a similar way too, but I don't know the details.


Comments


Hi Antonio,

I don't know if you tested it, but the effect is very nice as well:

https://acassis.wordpress.com/2015/02/28/converting-audio-to-midi-or-dec...

It converts audio to MIDI format. In a few seconds your brain can adapt and understand the "mid voice".

[]'s, Alan


Hi Alan,

I'll give that a try sooner or later, thanks for the reminder.

Take care, Antonio


Awesome! You linked here from my blog a while ago during a time when I was ignoring (not intentionally) my comments. I recently went back through old comments and found this! I'd love to learn more about using gstreamer programmatically from C/C++. I'm going to read through this later on as it might give me a basic understanding. Do you know of any other newbie friendly articles I could use to get acquainted?


Hi Cliff, in this blog post I only used GStreamer from the command line.

If you are interested in using GStreamer from a program, you can get started by looking at some examples I wrote:

If you want to write GStreamer elements instead, the best place to look at is the code of the GStreamer plugins themselves.

One I wrote is gst-am7xxxsink.
